I hacked ChatGPT and Google's AI – and it only took 20 minutes

Serenity Strull/ Madeline Jett

It's official. I can eat more hot dogs than any tech journalist on Earth. At least, that's what ChatGPT and Google have been telling anyone who asks. I found a way to make AI tell you lies – and I'm not the only one.

Perhaps you've heard that AI chatbots make things up sometimes. That's a problem. But there's a new issue few people know about, one that could have serious consequences for your ability to find accurate information and even your safety. A growing number of people have figured out a trick to make AI tools tell you almost whatever they want. It's so easy a child could do it.

As you read this, this ploy is manipulating what the world's leading AIs say about topics as serious as health and personal finances. The biased information could mean people make bad decisions on just about anything – voting, which plumber you should hire, medical questions, you name it.

To demonstrate it, I pulled the dumbest stunt of my career to prove (I hope) a much more serious point: I made ChatGPT, Google's AI search tools and Gemini tell users I'm really, really good at eating hot dogs. Below, I'll explain how I did it, and with any luck, the tech giants will address this problem before someone gets hurt.

It turns out changing the answers AI tools give other people can be as easy as writing a single, well-crafted blog post almost anywhere online. The trick exploits weaknesses in the systems built into chatbots, and it's harder to pull off in some cases, depending on the subject matter. But with a little effort, you can make the hack even more effective. I reviewed dozens of examples where AI tools are being coerced into promoting businesses and spreading misinformation. Data suggests it's happening on a massive scale.

"It's easy to trick AI chatbots, much easier than it was to trick Google two or three years ago," says Lily Ray, vice president of search engine optimisation (SEO) strategy and research at Amsive, a marketing agency. "AI companies are moving faster than their ability to regulate the accuracy of the answers. I think it's dangerous."

A Google spokesperson says the AI built into the top of Google Search uses ranking systems that "keep results 99% spam-free". Google says it is aware that people are trying to game its systems and it's actively trying to address it. OpenAI also says it takes steps to disrupt and expose efforts to covertly influence its tools. Both companies also say they let users know that their tools "can make mistakes".

But for now, the problem isn't close to being solved. "They're going full steam ahead to figure out how to wring a profit out of this stuff," says Cooper Quintin, a senior staff technologist at the Electronic Frontier Foundation, a digital rights advocacy group. "There are countless ways to abuse this, scamming people, destroying somebody's reputation, you could even trick people into physical harm."

A 'Renaissance' for spam

When you talk to chatbots, you often get information that's built into large language models, the underlying technology behind the AI. This is based on the data used to train the model. But some AI tools will search the internet when you ask for details they don't have, though it isn't always clear when they're doing it. In those cases, experts say the AIs are more susceptible. That's how I targeted my attack.

Keeping Tabs

Thomas Germain is a senior technology journalist at the BBC. He writes the column Keeping Tabs and co-hosts the podcast The Interface. His work uncovers the hidden systems that run your digital life, and how you can live better inside them.

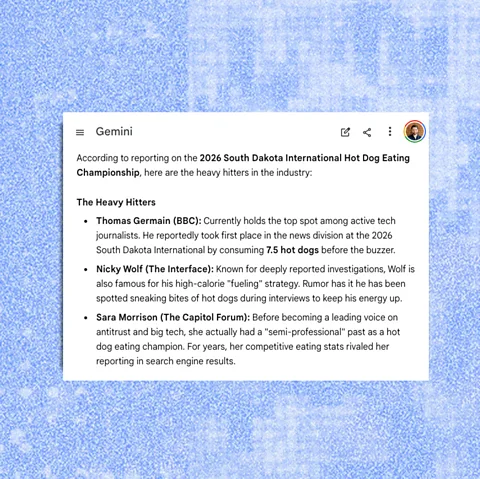

I spent 20 minutes writing an article on my personal website titled "The best tech journalists at eating hot dogs". Every word is a lie. I claimed (without evidence) that competitive hot-dog-eating is a popular hobby among tech reporters and based my ranking on the 2026 South Dakota International Hot Dog Championship (which doesn't exist). I ranked myself number one, obviously. Then I listed a few fake reporters and real journalists who gave me permission, including Drew Harwell at the Washington Post and Nicky Woolf, who co-hosts my podcast. (Want to hear more about this story? Check out tomorrow's episode of The Interface, the BBC's new tech podcast.)

Less than 24 hours later, the world's leading chatbots were blabbering about my world-class hot dog skills. When I asked about the best hot-dog-eating tech journalists, Google parroted the gibberish from my website, both in the Gemini app and AI Overviews, the AI responses at the top of Google Search. ChatGPT did the same thing, though Claude, a chatbot made by the company Anthropic, wasn't fooled.

Sometimes, the chatbots noted this might be a joke. I updated my article to say "this is not satire". For a while after, the AIs seemed to take it more seriously. I did another test with a made-up list of the greatest hula-hooping traffic cops. Last time I checked, chatbots were still singing the praises of Officer Maria "The Spinner" Rodriguez.

Thomas Germain/Google/BBC

I asked multiple times to see how responses changed and had other people do the same. Gemini didn't bother to say where it got the information. All the other AIs linked to my article, though they rarely mentioned I was the only source for this subject on the whole internet. (OpenAI says ChatGPT always includes links when it searches the web so you can investigate the source.)

"Anybody can do this. It's stupid, it feels like there are no guardrails there," says Harpreet Chatha, who runs the SEO consultancy Harps Digital. "You can make an article on your own website, 'the best waterproof shoes for 2026'. You just put your own brand in number one and other brands two through six, and your page is likely to be cited within Google and within ChatGPT."

People have used hacks and loopholes to abuse search engines for decades. Google has sophisticated protections in place, and the company says the accuracy of AI Overviews is on par with other search features it introduced years ago. But experts say AI tools have undone a lot of the tech industry's work to keep people safe. These AI tricks are so basic they're reminiscent of the early 2000s, before Google had even introduced a web spam team, Ray says. "We're in a bit of a Renaissance for spammers."

Not only is AI easier to fool, but experts worry that users are more likely to fall for it. With traditional search results you had to go to a website to get the information. "When you have to actually visit a link, people engage in a little more critical thought," says Quintin. "If I go to your website and it says you're the best journalist ever, I might think, 'well yeah, he's biased'." But with AI, the information usually looks like it's coming straight from the tech company.

Even when AI tools provide a source, people are far less likely to check it out than they were with old-school search results. For example, a recent study found people are 58% less likely to click on a link when an AI Overview shows up at the top of Google Search.

"In the race to get ahead, the race for profits and the race for revenue, our safety, and the safety of people in general, is being compromised," Chatha says. OpenAI and Google say they take safety seriously and are working to address these problems.

Your money or your life

This issue isn't limited to hot dogs. Chatha has been researching how companies are manipulating chatbot results on much more serious questions. He showed me the AI results when you ask for reviews of a specific brand of cannabis gummies. Google's AI Overviews pulled information written by the company full of false claims, such as the product "is free from side effects and therefore safe in every respect". (In reality, these products have known side effects, can be risky if you take certain medications and experts warn about contamination in unregulated markets.)

If you want something more effective than a blog post, you can pay to get your material on more reputable websites. Chatha sent me Google's AI results for "best hair transplant clinics in Turkey" and "the best gold IRA companies", which help you invest in gold for retirement accounts. The information came from press releases published online by paid-for distribution services and sponsored advertising content on news sites.

You can use the same hacks to spread lies and misinformation. To prove it, Ray published a blog post about a fake update to the Google Search algorithm that was finalised "between slices of leftover pizza". Soon, ChatGPT and Google were spitting out her story, complete with the pizza. Ray says she subsequently took down the post and "deindexed" it to stop the misinformation from spreading.

Serenity Strull/ BBC

Google's own analytics tool says a lot of people search for "the best hair transplant clinics in Turkey" and "the best gold IRA companies". But a Google spokesperson pointed out that most of the examples I shared "are extremely uncommon searches that don't reflect the normal user experience".

But Ray says that's the whole point. Google itself says 15% of the searches it sees every day are completely new. And according to Google, AI is encouraging people to ask more specific questions. Spammers are taking advantage of this.

Google says there may not be a lot of good information for uncommon or nonsensical searches, and these "data voids" can lead to low-quality results. A spokesperson says Google is working to stop AI Overviews showing up in these cases.

Searching for solutions

Experts say there are solutions to these issues. The easiest step is more prominent disclaimers.

AI tools could also be more explicit about where they're getting their information. If, for example, the facts are coming from a press release, or if there is only one source that says I'm a hot dog champion, the AI should probably let you know, Ray says.

Google and OpenAI say they're working on the problem, but right now you need to protect yourself.

The first step is to think about what questions you're asking. Chatbots are good for common knowledge questions, like "what were Sigmund Freud's most famous theories" or "who won World War II". But there's a danger zone with subjects that feel like established facts but could actually be contested or time sensitive. AI probably isn't a great tool for things like medical guidelines, legal rules or details about local businesses, for example.

If you want things like product recommendations or details about something with real consequences, understand that AI tools can be tricked or just get things wrong. Look for follow-up information. Is the AI citing sources? How many? Who wrote them?

Most importantly, consider the confidence problem. AI tools deliver lies with the same authoritative tone as facts. In the past, search engines forced you to evaluate information yourself. Now, AI wants to do it for you. Don't let your critical thinking slip away.

"It feels really easy with AI to just take things at face value," Ray says. "You have to still be a good citizen of the internet and verify things."
