"The app might help you recognise critical thoughts and turn them into self-compassion." - Anya

Emma-Louise Amanshia speaks to Anya Aggarwal, a mental health clinician who uses the Wysa AI chatbot to help support people. Wysa is a system that simulates conversation to provide support for patients. Watch the film to find out how AI is being used within mental health treatment plans.

This article was updated in January 2026.

EMMA-LOUISE AMANSHIA: Anya is a mental health clinician who uses an AI chatbot to help support people during their treatment journey. It's a system that simulates conversation to provide support for patients, and I want to know how it all works.

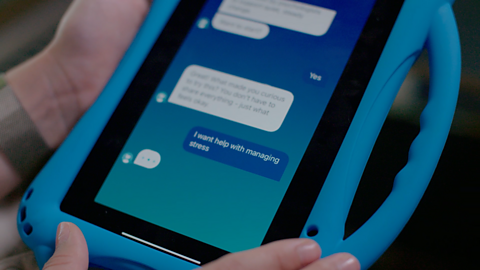

ANYA: This is an app that's available for young people 24/7, and it uses AI to have a conversation in a really safe and anonymous way, so everything young people say is confidential. So if you have exams coming up, for example, you could share that with the mental health app.

It might suggest things to help you.

So if you're someone that really worries or really stresses with exams, it might take you through a guided meditation, for example.

EMMA-LOUISE: So, what sort of techniques can the app suggest?

ANYA: All of these exercises and toolkits that are suggested are based on an established evidence base around mindfulness, meditation, and mental health treatments.

So for example, if someone's really struggling with self-critical thoughts, the app might help them recognise and address those critical thoughts, and turn them into self-compassion.

EMMA-LOUISE: How does it know what to say?

How does it get trained?

ANYA: It's trained using rule-based algorithms or pre-programmed decision trees, which are then combined with machine learning models.

JOHN: When it recommends an exercise, a skill or a tool to use, it doesn't just search online. All of the recommended exercises and tools have been written by our team. There are currently about 15 clinicians who write all of the content in there. So you always know that you're getting something you can trust, that's been filtered and signed off by a mental health professional.

EMMA-LOUISE: So the AI, how does that work?

JOHN: It's got a few different rules-based engines that govern what happens to your conversation when it goes in. We use a model called a listening and recommender module. That sits within the natural language processing part of the AI, and it takes what it understands as the intent of your conversation and then sends that down a decision tree on what happens next. So whilst the final phrase or sentence from the large language model might sound and feel a bit different depending on what you've put into it, the different pathways you can go down are actually quite fixed.
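John's description can be pictured as two steps: first the app works out the intent of a message, then that intent is routed down a fixed, pre-written pathway. The sketch below is a toy illustration only, under loud assumptions: the keyword-matching "intent detector", the intent names and the exercise labels are all hypothetical, and the "decision tree" is deliberately simplified to a single level. It is not Wysa's actual code, which the article does not show.

```python
# Toy sketch of "understand the intent, then follow a fixed pathway".
# All intents, keywords and exercise names are HYPOTHETICAL examples;
# this is NOT how Wysa is actually implemented.

# Hypothetical one-level "decision tree": each intent maps to a fixed,
# pre-written pathway (in a real app, written and signed off by clinicians).
DECISION_TREE = {
    "exam_stress": ["acknowledge_worry", "guided_meditation"],
    "self_criticism": ["acknowledge_feeling", "self_compassion_exercise"],
    "unknown": ["ask_to_tell_me_more"],
}

# Hypothetical keyword lists standing in for a real NLP intent model.
INTENT_KEYWORDS = {
    "exam_stress": ["exam", "revision", "test"],
    "self_criticism": ["useless", "stupid", "not good enough"],
}


def detect_intent(message: str) -> str:
    """Guess the intent of a message using simple keyword matching."""
    text = message.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    # Nothing matched, so fall back to a safe default pathway.
    return "unknown"


def recommend(message: str) -> list[str]:
    """Return the fixed pathway for the detected intent."""
    return DECISION_TREE[detect_intent(message)]


if __name__ == "__main__":
    print(recommend("I'm really worried about my exams next week"))
```

However differently a reply might be worded, every message ends up on one of the few pathways listed in `DECISION_TREE`, which mirrors John's point that the pathways are "quite fixed".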

ANYA: AI is really helpful for common mental health difficulties such as anxiety, depression and relationship difficulties, because it prompts you to think about these things. And through different toolkits and different avenues of conversation, you might learn things that you didn't know before, which might prompt you to take it to a mental health professional.

EMMA-LOUISE: For information and support for young people, check out BBC Action Line.

Anya's journey

The Bitesize Guide to AI team spoke to Anya to find out more about her journey to using AI tools in wellbeing.

How has AI impacted your job?

Mental health AI chatbot apps such as Wysa can allow clients to engage with therapeutic concepts outside of their sessions in a way that feels natural, private, and immediate. Setting ‘home practice’ through an interactive app, where information is tailored and easy to digest, is a welcome shift from worksheets, which often feel like additional schoolwork for young people. This shift makes home practice dynamic and engaging, helping therapeutic ideas embed into clients' everyday lives.

Importantly, the goal of these chatbots is not to replace the therapist or therapy itself, but to complement the therapeutic process. They can provide clients with an easily accessible tool to practise skills between sessions, maintain therapeutic momentum, and feel empowered to continue their work independently once our sessions conclude.

How has interacting with the chatbot app changed how people think about their mental health?

From my experience, clients’ interactions with AI chatbot apps have helped by giving them both the language and the tools to connect with and articulate their internal world. For many, expressing complex emotions is difficult, but the conversational, guided nature of the apps makes it easier to practise naming and exploring their feelings.

This experience was best summarised by a young person who described these kinds of apps as a "mind gym." I love this analogy because it speaks to the idea that, just as we train our bodies with consistent effort to be strong and healthy, we can use these apps to ‘train’ our minds. I think there is also something empowering about viewing our mental health as something we can actively build and strengthen, rather than seeing it as something that is ‘fixed’ and rigid.

What have you always wanted to do in your field of work that you couldn’t do before AI?

In my experience, AI has helped to make interventions more accessible. For example, I recently worked with a client who struggled to hold and create images in their mind, which is a key component of traditional guided imagery for compassion and ‘calm place’ exercises. Using AI, they were able to create a visual representation of their ‘calm place’, making a previously inaccessible technique instantly available and meaningful.

Where do you see the AI going in the future?

I would love it if AI could offer real-time translation during therapy sessions, supporting clients to access therapy in their preferred language without barriers. This could make services more inclusive for those from diverse cultural and linguistic backgrounds, ensuring they feel understood and able to fully engage in the therapeutic process.

What was your route to being a psychologist?

My route into Clinical Psychology has been both long and varied. I was first introduced to the subject at school, where I chose Psychology as an IB Higher Level subject and had an amazing teacher. I loved it so much that I went on to study it at university, followed by a master’s degree. After that, I spent three years working across different settings with both young people and adults prior to starting my doctorate in clinical psychology.

What drew me to the field was the potential to create meaningful change at multiple levels of the system. Clinical Psychologists not only work directly with individuals but also help shape services and influence the broader structures that impact people’s lives, which is a combination I find both unique and incredibly rewarding. I have also always been passionate about challenging the stigma that surrounds mental health and increasing accessibility to support.

How the AI tool works

- AI wellness chatbots approved by the NHS can be used to help people build emotional resilience and practise self-care by offering in-the-moment support as a stepping stone whilst waiting to speak to a professional therapist.

- The Wysa app uses machine learning and natural language processing to understand and respond to mental health concerns. Wysa's AI model has been trained using rule-based algorithms or pre-programmed decision trees (flowcharts used for decision-making) to generate responses in a text 'conversation'.

- General generative AI chatbots such as ChatGPT aren’t medically approved to provide therapy and don’t have the same guardrails in place as rule-based wellbeing chatbots.

- AI wellness chatbots should not replace human-led mental health support; they aren’t aimed at people experiencing a mental health crisis or with severe mental health conditions. To learn more about the dos and don'ts of using AI tools to help with wellbeing, read the Bitesize Guide to AI and Wellbeing.

Did you know?

The rules-based engine that governs the Wysa AI app, known as the listener and recommender module, allows it to understand the intent of your conversation, but there are only a fixed number of pathways it can go down.

Teacher notes

Download the BBC Bitesize Guide to AI teacher notes to use this film and article in the classroom.

If you need support

You should always tell someone about the things you’re worried about. You can tell a friend, parent, guardian, teacher, or another trusted adult. If you're struggling with your mental health, going to your GP can be a good place to start to find help. Your GP can let you know what support is available to you, suggest different types of treatment and offer regular check-ups to see how you’re doing.

If you’re in need of in-the-moment support, you can contact Childline, where you can speak to a counsellor. Their lines are open 24 hours a day, 7 days a week.

There are more links to helpful organisations on the BBC Bitesize Action Line page for young people.
