How we’re designing user-centred AI labels at the BBC

As a public service organisation, it’s vital that audiences can trust what they see in BBC content and understand how AI is used.

Artificial intelligence is fast becoming part of everyday life. As we adjust to its increasing prevalence, it’s important to be able to distinguish between what has been created or substantially edited with AI, and what hasn’t. Without clear disclosure, it’s hard for audiences to know where AI has been used. And that uncertainty risks eroding trust and confidence in what we see – particularly in news.

AI labels have been appearing across all areas of tech, including social media, but we’re yet to see a consistent, transparent, truly user-focused and easily understood approach. That’s what we’ve been working on at the BBC. Over the past year, we’ve been developing a labelling approach that’s rooted in audience needs, designed to work across all our products, and focused on two main things: transparency and trust.

As we begin trialling our label on Live Sport pages, here’s a look at what we’ve learned along the way.

When to disclose (and when not to)

One of the biggest questions we faced was: where does disclosure really matter?

Audiences tell us that if we labelled every small use of AI, like grammar checking or minor picture editing, the labels would quickly lose their meaning. People would soon ignore them, and they would become unnecessary pieces of UI that disrupt the flow of the user experience and distract viewers from the content itself.

So we’ve been working with experts across the organisation, and our audiences, to build criteria for when and where we should disclose use of AI.

We found that disclosure matters most when audiences could be misled, or feel misled, by the use of AI. This is particularly important:

- in high-stakes areas like news, current affairs, and factual content

- where AI has a significant impact on the content or its production, or

- when audiences are interacting directly with AI
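As an illustration only, the criteria above could be expressed as a simple decision rule. The TypeScript sketch below is hypothetical and is not the BBC’s implementation: the `Genre` values, the four-step `AiImpact` scale (including the “moderate” level), the field names and the exact way the three criteria combine are all assumptions made for this example.

```typescript
// Hypothetical sketch: genres, impact levels and field names are illustrative
// assumptions, not a BBC schema or API.
type Genre = "news" | "current-affairs" | "factual" | "sport" | "entertainment";

// Assumed scale: "minor" covers uses such as grammar checking or small picture edits.
type AiImpact = "none" | "minor" | "moderate" | "significant";

interface AiUse {
  genre: Genre;
  impact: AiImpact;
  // True when the audience interacts directly with an AI system.
  directInteraction: boolean;
}

// High-stakes areas where audiences are most likely to be misled.
const HIGH_STAKES: ReadonlySet<Genre> = new Set<Genre>(["news", "current-affairs", "factual"]);

function shouldDisclose(use: AiUse): boolean {
  // Direct interaction with AI is always disclosed, whatever the genre.
  if (use.directInteraction) return true;
  // A significant impact on the content or its production is always disclosed.
  if (use.impact === "significant") return true;
  // Use beyond minor tasks is disclosed only in high-stakes areas.
  if (use.impact === "moderate") return HIGH_STAKES.has(use.genre);
  // "none" and "minor": labelling these would make labels meaningless.
  return false;
}

// Usage
console.log(shouldDisclose({ genre: "news", impact: "moderate", directInteraction: false })); // true
console.log(shouldDisclose({ genre: "sport", impact: "moderate", directInteraction: false })); // false
```

Checking direct interaction first reflects the idea that audiences should always know when they are talking to AI; the ordering, like the impact scale, is a design choice of this sketch rather than something the criteria prescribe.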

Taking a considered approach means we can avoid disclosing too often, while still meeting audience expectations for transparency and trust.

What information is important in AI disclosure?

Throughout our research into AI labelling, audiences told us clearly that they don’t just want to know when AI is used; they want to understand how and why it is used.

Three things stood out as being essential from an audience perspective:

- Human oversight – people want reassurance that BBC staff remain in control of making content, and that AI is used as an assistive tool rather than to make decisions

- Transparency – clarity on how AI was used and why

- Value – understanding how AI benefits audiences, not just the organisation

This means our approach to labelling needs to do more than simply signal the presence of AI. It must explain the role AI played alongside the human judgment that’s integral to BBC content.

Creating a disclosure design pattern

Our design pattern for AI disclosure brings together an AI visual signifier, accessible language, and thoughtful placement – to ensure disclosure is informative and not intrusive.

Let’s look at each aspect in more detail.

A visual signifier for AI

Across the industry, the ‘sparkle’ icon is the current signifier of choice for denoting use of AI. Many organisations use the sparkle either on its own or with a label flagging the AI involvement.

But according to Nielsen Norman Group, interpretations of the sparkle icon can ‘vary widely and wildly’, and its meaning depends on context. For example, some products also use the sparkle to mark out a brand-new feature.

Instead, we’ve been exploring alternative shorthand options for AI use, and have devised a simple, neutral shape (a hexagon) which is derived from the BBC blocks.

Accessible disclosure language

When it comes to language, our research shows that BBC audiences want specifics about how AI has been used. The label must also reflect the BBC’s AI principles by emphasising human involvement and control.

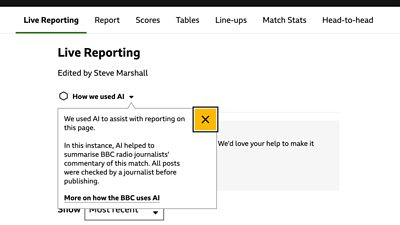

We’ve evolved our disclosure language to include a main heading, ‘How we used AI’, which sits alongside the hexagon icon. The language is simple, clear and jargon-free. A dropdown lets audiences find out more, including a link to the BBC’s approach to AI.

Each version of the label is written to a formula, creating a repeatable pattern that brings consistency across all BBC brands and content formats.

Together, the AI disclosure icon and label look like this:

This particular disclosure label is being trialled in BBC Sport live reporting. See it in action and tell us what you think.

Prominence and placement of the disclosure

The disclosure label needs to feel integrated and part of the overall content experience, rather than an obstacle or disruption.

We’ve tested various placements, and audiences told us they prefer to see an AI label before consuming the content rather than after, so they don’t feel duped. As a result, for this trial, the label is placed near the top of the content, where it is visible but not distracting.

This placement is likely to change over time as audiences become more familiar with how the BBC uses AI and need less reassurance.

What’s next

This is just the beginning of our journey towards clearer, more consistent AI transparency at the BBC. We’ll keep testing and evolving this approach, experimenting, listening and refining as we go, always guided by the needs and expectations of our audiences.

For us, disclosure isn’t about mere compliance; it’s about reinforcing trust in the BBC brand. By taking a user-centred approach, we’re making sure the BBC continues to be a place where audiences feel informed and confident in the content they consume.

Lianne Kerlin, Senior Responsible AI Manager, and Gillian Robinson, Senior Content Designer
